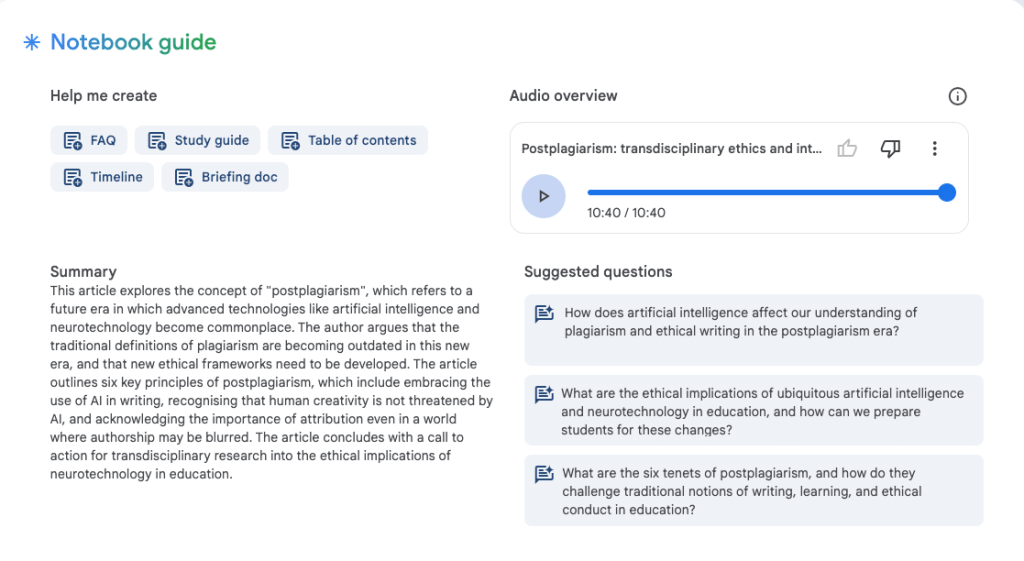

This weekend I have been playing around with Google’s NotebookLM. This is a brand new, completely free service that can help users learn content in a variety of ways. It is powered by Gemini 1.5 Pro and it’s worth checking out.

To test it out, I uploaded a copy of “Postplagiarism: Transdisciplinary ethics and integrity in the age of artificial intelligence and neurotechnology” in .pdf format.

Here is what NotebookLM came up with:

Audio Conversation

Perhaps the most impressive of the outputs is a podcast-style audio conversation that is completely AI-generated. You can listen to the Postplagiarism conversation here.

Postplagiarism: Navigating Ethics and Integrity in the Age of AI: Study Guide

Summary

This article introduces the concept of “postplagiarism,” an era defined by the seamless integration of artificial intelligence (AI) and neurotechnology into everyday life, including education. Eaton explores the implications of this integration for academic integrity, arguing that traditional definitions of plagiarism may become obsolete as AI blurs the lines of authorship.

The author posits six key tenets of postplagiarism:

- Hybrid human-AI writing will become the norm: Co-created content will make distinguishing human and AI input increasingly difficult.

- Human creativity is enhanced: AI serves as a tool to augment human creativity, not replace it.

- Language barriers disappear: AI translation tools have the potential to facilitate cross-cultural communication.

- Humans can relinquish control, but not responsibility: While AI can assist in content creation, individuals remain accountable for accuracy and ethical use.

- Attribution remains important: Acknowledging sources and intellectual contributions remains crucial, with evolving considerations for Indigenous knowledge systems.

- Historical definitions of plagiarism no longer apply: Shifting technological landscapes necessitate new frameworks for ethical engagement with information and knowledge creation.

Eaton challenges Chomsky’s view of AI as “high-tech plagiarism,” arguing instead that it demands a reevaluation of existing definitions of plagiarism within the context of rapidly evolving technological capabilities. She advocates for pre-emptive research into the ethical implications of AI and neurotechnology, particularly commercially available brain-computer interfaces (BCIs), highlighting the need for transdisciplinary collaboration to address these complex issues.

The article concludes with a call to action, urging educators to prepare students for a future characterized by ubiquitous AI and neurotechnology, emphasizing ethical considerations and societal impact.

Quiz

Instructions: Answer the following questions in 2-3 sentences.

- What does Eaton mean by “postplagiarism”?

- Why does Eaton believe that AI will make traditional definitions of plagiarism obsolete?

- What is Eaton’s stance on Chomsky’s characterization of AI as “high-tech plagiarism”?

- Explain the concept of “hybrid human-AI writing” and its implications.

- According to Eaton, how does the emergence of AI impact human creativity?

- Why does Eaton believe that attribution remains important in the age of AI?

- What ethical concerns does Eaton raise regarding the use of neurotechnology in education?

- Why does Eaton believe that educators were caught off guard by both the COVID-19 pandemic and the emergence of AI like ChatGPT?

- What type of research does Eaton advocate for in relation to AI and neurotechnology in education?

- What is Eaton’s primary call to action for educators in light of the emerging postplagiarism era?

Quiz Answer Key

- “Postplagiarism” refers to an era where advanced technologies like AI and neurotechnology are fully integrated into everyday life, including education. This integration significantly impacts how we teach, learn, communicate, and engage with knowledge.

- Eaton argues that traditional definitions of plagiarism, often focused on verbatim copying, become inadequate in the face of AI. This is because AI tools enable the creation of sophisticated, original content, blurring the lines between human and machine authorship and challenging the notion of plagiarism as we know it.

- Eaton disagrees with Chomsky’s view, arguing that AI should not be simply dismissed as “high-tech plagiarism.” Instead, she calls for a nuanced understanding of AI’s capabilities and a re-evaluation of existing plagiarism definitions within the context of evolving technology.

- “Hybrid human-AI writing” refers to the collaborative process where humans and AI tools work together to create content. This collaboration makes it difficult to discern human input from AI-generated content, challenging traditional authorship and plagiarism detection methods.

- Eaton argues that AI enhances, rather than threatens, human creativity. She sees AI tools as resources that can augment human imagination and inspire new forms of creative expression, rather than replacing human ingenuity.

- Eaton stresses the continued importance of attribution in acknowledging intellectual debts and recognizing the contributions of others. In the age of AI, this includes responsibly crediting both human and AI sources, acknowledging the collaborative nature of knowledge creation.

- Eaton raises ethical concerns related to privacy, consent, and equitable access when it comes to neurotechnology in education. She particularly highlights concerns regarding commercially available brain-computer interfaces (BCIs) and their potential for misuse or exacerbating existing inequalities.

- Eaton suggests that educators were caught off guard by both the rapid shift to online learning during the COVID-19 pandemic and the sudden rise of AI tools like ChatGPT. She attributes this to a lack of preparedness for large-scale disruptions and the exponential pace of technological advancement.

- Eaton advocates for pre-emptive, transdisciplinary research on the ethical implications of AI and neurotechnology in education. This research would involve collaboration between academics, policymakers, and industry experts to address complex questions surrounding equitable access, privacy, and the impact on learning and teaching practices.

- Eaton calls on educators to proactively prepare students for a future deeply integrated with AI and neurotechnology. This preparation involves fostering critical thinking skills, ethical awareness regarding technology use, and adaptability in the face of evolving technological landscapes.

Essay Questions

- Analyse Eaton’s argument that traditional definitions of plagiarism are no longer applicable in the age of AI. How does AI challenge existing notions of authorship and originality?

- Discuss the ethical implications of commercially available neurotechnology in education. What are the potential benefits and risks associated with using such technologies in learning environments?

- How should educational institutions adapt their policies and practices to address the challenges and opportunities presented by postplagiarism?

- In what ways can AI be used to enhance, rather than replace, human creativity and critical thinking skills in educational settings? Provide specific examples to support your arguments.

- Discuss the potential impact of AI-driven translation tools on language learning and cross-cultural communication in education. How can we ensure equitable access to these tools and address concerns related to language diversity and cultural representation?

Glossary of Key Terms

- Postplagiarism: An era characterized by the seamless integration of advanced technologies like AI and neurotechnology into everyday life, impacting how we learn, communicate, and create knowledge.

- Artificial Intelligence (AI): The ability of a computer or a robot controlled by a computer to perform tasks that are usually done by humans because they require human intelligence and discernment.

- Neurotechnology: Technology that interacts directly with the brain or nervous system, encompassing tools for monitoring, recording, or modulating neural activity.

- Brain-Computer Interface (BCI): A device that establishes a direct communication pathway between the brain and an external device, often a computer, enabling the control of the device using brain signals.

- Hybrid Human-AI Writing: Collaborative content creation involving both human input and AI-generated text, blurring the lines of authorship.

- Large Language Model (LLM): A type of AI trained on a massive dataset of text and code to generate human-quality text, translate languages, write different kinds of creative content, and answer your questions in an informative way.

- Decolonization: The process of undoing the harmful effects of colonialism, particularly in educational contexts, challenging Eurocentric perspectives and promoting Indigenous knowledge systems.

- Knowledge Caretaker: An individual or community responsible for preserving, transmitting, and respectfully engaging with knowledge, particularly within Indigenous cultures.

This study guide provides a comprehensive overview of the article, aiding in your understanding of the key concepts and arguments presented. Remember to utilize the quiz and essay questions to test your knowledge and delve deeper into the complexities of postplagiarism.

Postplagiarism FAQ

1. What is postplagiarism?

Postplagiarism refers to an era where advanced technologies, such as artificial intelligence (AI) and neurotechnology like brain-computer interfaces (BCIs), are deeply integrated into our daily lives, including education. This integration will significantly impact how we teach, learn, and interact.

2. How will AI impact academic writing?

AI writing tools are becoming increasingly sophisticated, making it difficult to distinguish between human and AI-generated text. This rise of hybrid human-AI writing presents challenges to traditional notions of plagiarism. While AI can enhance creativity by assisting with writing tasks, it also raises concerns about authenticity and the need to uphold ethical writing practices.

3. Will AI make learning languages irrelevant?

While AI translation tools are becoming more powerful, they won’t render language learning obsolete. Although AI can facilitate communication across language barriers, it cannot replace the cultural understanding and nuanced communication that comes with learning a language.

4. Can I use AI to complete my assignments?

Even with AI assistance, students are ultimately responsible for their academic work. Using AI to complete assignments without proper attribution or understanding can be considered a form of academic misconduct. Educators have a responsibility to adapt assessment methods to ensure students demonstrate genuine learning.

5. How does postplagiarism affect attribution practices?

While traditional citation methods remain important, postplagiarism challenges us to rethink how we acknowledge and value knowledge sources, particularly Indigenous knowledge systems often overlooked in standard academic practices. Respectful attribution in the postplagiarism era requires moving beyond technical citations to engage deeply with the works and ideas of others.

6. Are current academic integrity policies equipped to handle AI?

Existing definitions and policies related to plagiarism may need to be reevaluated in the age of postplagiarism. The lines are becoming blurred, demanding a more nuanced understanding of academic integrity that goes beyond simply detecting copied text.

7. What about neurotechnology? How will it impact education?

The emergence of neurotechnology, particularly BCIs, brings both opportunities and challenges. While it has the potential to revolutionize learning and assist individuals with disabilities, it also raises ethical concerns regarding privacy, autonomy, and potential misuse for academic cheating.

8. What can educators do to prepare for the postplagiarism era?

Educators should actively engage in discussions surrounding AI and neurotechnology in education. This includes researching ethical implications, promoting responsible AI use, adapting teaching practices, and fostering critical thinking skills in students to navigate this evolving landscape. Collaboration between educators, policymakers, and researchers is crucial to address these challenges proactively.

Sarah’s Reflections on NotebookLM

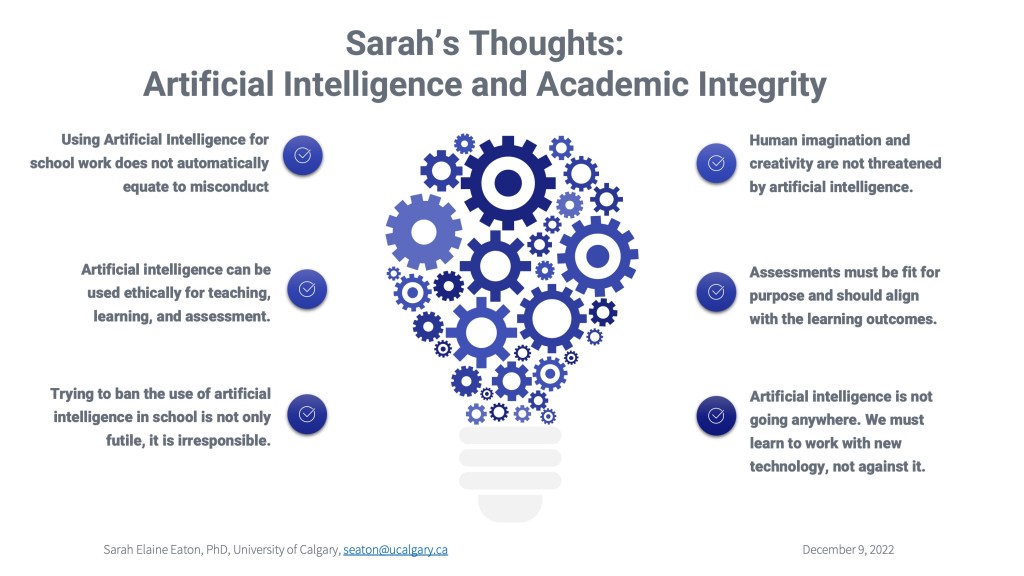

NotebookLM is a game-changer for teaching and learning. Students can upload any material to the app and generate content in plain language in both text and audio format. There are benefits and drawbacks to any technology and here are some that come to mind for NotebookLM:

Benefits

- Personalized Learning: NotebookLM can tailor learning experiences to individual students’ needs, pace, and preferences. It can provide personalized explanations, examples, and practice test questions. I like this aspect of NotebookLM because it allows learners to personalize their own learning experience, rather than having a teacher do it for them.

- Enhanced Engagement: By offering interactive and engaging content, NotebookLM can increase student interest and motivation by situating the locus of control for the learning with the student. I like this because the app can help to promote learner autonomy and agency. It can also facilitate collaborative learning through features like group discussions and shared notes.

- Accessibility and UDL: The tool can make learning more accessible to students with disabilities or learning difficulties, or really, to any learner. It does this by providing the content in a variety of formats, such as text-based summaries or the podcast-style audio conversation.

- 24/7 Support: NotebookLM can be available to students at any time, providing a resource for independent learning and practice. No matter when a student prefers to do their learning, “just-in-time” tools like this meet learners where they are at, on their timeline, not the teacher’s timeline.

Drawbacks

- Lack of Human Interaction: Although NotebookLM can provide valuable support, it cannot fully replace the human connection and guidance that educators offer. The affective aspects of teaching and learning, and the social connections, remain important.

- Dependency on Technology: Overreliance on NotebookLM could lead to technological issues and disruptions in learning. For example, students who are overly dependent on technology may struggle to adapt to situations where the tool is not available or appropriate. Tools like this may, or may not, help students to develop metacognitive skills and evaluative judgement. (For more info on assessment in the age of generative AI, check out this article by Margaret Bearman and Rosemary Luckin.)

- Perpetuation of Inequities: Students from disadvantaged backgrounds may have limited access to technology or to Internet connectivity, creating a digital divide and exacerbating educational inequalities. So, just as tools like this can enhance accessibility, they may simultaneously erode equity in different ways.

- Data Privacy Concerns: The collection and use of student data raise privacy concerns and require careful consideration of data protection measures. There are also questions about copyright and what happens when students upload work to which others hold the copyright.

- Potential for Misuse: NotebookLM could be misused by students to cheat or generate inaccurate content, requiring educators to implement appropriate safeguards. So, like any other technology, it can be used ethically or unethically. Students may or may not know what is allowed or expected, so having conversations with students about expectations remains important.

Thank you to my friend and colleague, Dr. Soroush Sabbaghan, Associate Professor (Teaching) at the University of Calgary, for introducing me to NotebookLM a few days ago. I am keen to hear what learners and educators think of this tool.

References

Eaton, S. E. (2023). Postplagiarism: Transdisciplinary ethics and integrity in the age of artificial intelligence and neurotechnology. International Journal for Educational Integrity, 19(1), 1-10. https://doi.org/10.1007/s40979-023-00144-1

Related posts:

- 6 Tenets of Postplagiarism: Writing in the Age of Artificial Intelligence – https://drsaraheaton.wordpress.com/2023/02/25/6-tenets-of-postplagiarism-writing-in-the-age-of-artificial-intelligence/

- The Use of AI-Detection Tools in the Assessment of Student Work – https://drsaraheaton.wordpress.com/2023/05/06/the-use-of-ai-detection-tools-in-the-assessment-of-student-work/

_________________________________

Share this post: Exploring Postplagiarism Using Google’s Notebook LM – https://drsaraheaton.com/2024/09/23/exploring-postplagiarism-with-google-notebooklm/

This blog has had over 3 million views thanks to readers like you. If you enjoyed this post, please “like” it or share it on social media. Thanks!

Sarah Elaine Eaton, PhD, is a faculty member in the Werklund School of Education, and the Educational Leader in Residence, Academic Integrity, University of Calgary, Canada. Opinions are my own and do not represent those of the University of Calgary.

Posted by Sarah Elaine Eaton, Ph.D.
