Artificial intelligence (AI) has moved from a futuristic concept to an everyday reality. Rather than viewing AI tools like ChatGPT as threats to academic integrity, forward-thinking educators are discovering their potential as powerful teaching instruments. Here’s how you can meaningfully incorporate AI into your classroom while promoting critical thinking and ethical technology use.

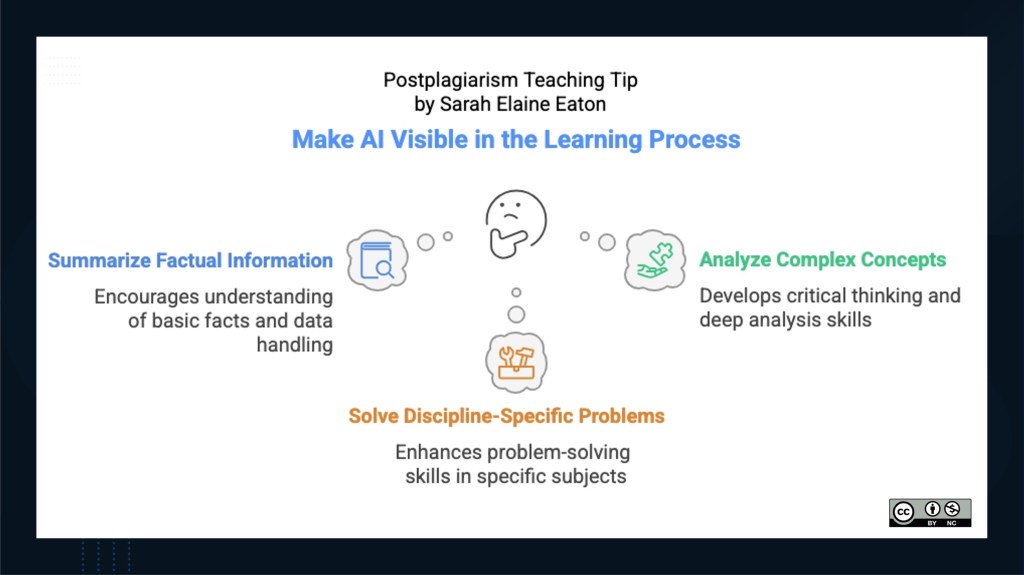

Making AI Visible in the Learning Process

One of the most effective approaches to teaching with AI is to bring it into the open. When we demystify these tools, students develop a more nuanced understanding of the tools’ capabilities and limitations.

Start by dedicating class time to explore AI tools together. You might begin with a demonstration of how ChatGPT or similar tools respond to different types of prompts. Ask students to compare the quality of responses when the tool is asked to:

- Summarize factual information

- Analyze a complex concept

- Solve a problem in your discipline

Have students identify where the AI excels and where it falls short. Supervised, hands-on experience helps students understand that while AI can be impressive and capable, it has clear boundaries and weaknesses.

From AI Drafts to Critical Analysis

AI tools can quickly generate content that serves as a starting point for deeper learning. Here is a step-by-step approach for using AI-generated drafts as teaching material:

- Assignment Preparation: Choose a topic relevant to your course and generate a draft response using an AI tool such as ChatGPT.

- Collaborative Analysis: Share the AI-generated draft with students and facilitate a discussion about its strengths and weaknesses. Prompt students with questions such as:

  - What perspectives are missing from this response?

  - How could the structure be improved?

  - What claims require additional evidence?

  - How might we make this content more engaging or relevant?

The goal is to bring students into conversations about AI, building their critical thinking as they puzzle through the strengths and weaknesses of current AI tools.

- Revision Workshop: Have students work individually or in groups to revise the AI draft into a more nuanced, complete response. This process teaches students that the value lies not in generating initial content (which AI can do) but in refining, expanding, and critically evaluating information (which requires human judgment).

- Reflection: Ask students to document what they learned through the revision process. What gaps did they identify in the AI’s understanding? How did their human perspective enhance the work? Metacognitive awareness of this kind is one of the skills that assessment experts such as Bearman and Luckin (2020) emphasize in their work.

This approach shifts the educational focus from content creation to content evaluation and refinement—skills that will remain valuable regardless of technological advancement.

Teaching Fact-Checking Through Deliberate Errors

AI systems often present information confidently, even when that information is incorrect or fabricated. This characteristic makes AI-generated content perfect for teaching fact-checking skills.

Try this classroom activity:

- Generate Content with Errors: Use an AI tool to create content in your subject area, either by asking it to include deliberate errors or by asking about obscure topics where the AI might fabricate details.

- Fact-Finding Mission: Provide this content to students with the explicit instruction to identify potential errors and verify information. You might structure this as:

  - Individual verification of specific claims

  - Small group investigation with different sections assigned to each group

  - A whole-class collaborative fact-checking document

- Source Evaluation: Have students document not just whether information is correct, but how they determined its accuracy. This reinforces the importance of consulting authoritative sources and cross-referencing information.

- Meta-Discussion: Use this opportunity to discuss why AI systems make these kinds of errors. Topics might include:

  - How large language models are trained

  - The concept of ‘hallucination’ in AI

  - The difference between pattern recognition and understanding

  - Why AI might present incorrect information with high confidence

These activities teach students not just to be skeptical of AI outputs but to develop systematic approaches to information verification—an essential skill in our information-saturated world.

Case Studies in AI Ethics

Ethical considerations around AI use should be explicit rather than implicit in education. Develop case studies that prompt students to engage with real ethical dilemmas:

- Attribution Discussions: Present scenarios where students must decide how to properly attribute AI contributions to their work. For example, if an AI helps to brainstorm ideas or provides an outline that a student substantially revises, how could this be acknowledged?

- Equity Considerations: Explore cases highlighting AI’s accessibility implications. Who benefits from these tools? Who might be disadvantaged? How might different cultural perspectives be underrepresented in AI outputs?

- Professional Standards: Discuss how different fields are developing guidelines for AI use. Medical students might examine how AI diagnostic tools should be used alongside human expertise, while creative writing students could debate the role of AI in authorship.

- Decision-Making Frameworks: Help students develop personal guidelines for when and how to use AI tools. What types of tasks might benefit from AI assistance? Where is independent human work essential?

These discussions help students develop thoughtful approaches to technology use that will serve them well beyond the classroom.

Implementation Tips for Educators

As you incorporate these approaches into your teaching, consider these practical suggestions:

- Start small with one AI-focused activity before expanding to broader integration

- Be transparent with students about your own learning curve with these technologies

- Update your syllabus to clearly outline expectations for appropriate AI use

- Document successes and challenges to refine your approach over time

- Share experiences with colleagues to build institutional knowledge

Moving Beyond the AI Panic

The concept of postplagiarism does not mean abandoning academic integrity—rather, it calls for reimagining how we teach integrity in a technologically integrated world. By bringing AI tools directly into our teaching practices, we help students develop the critical thinking, evaluation skills, and ethical awareness needed to use these technologies responsibly.

When we shift our focus from preventing AI use to teaching with and about AI, we prepare students not just for academic success, but for thoughtful engagement with technology throughout their lives and careers.

References

Bearman, M., & Luckin, R. (2020). Preparing university assessment for a world with AI: Tasks for human intelligence. In M. Bearman, P. Dawson, R. Ajjawi, J. Tai, & D. Boud (Eds.), Re-imagining University Assessment in a Digital World (pp. 49-63). Springer International Publishing. https://doi.org/10.1007/978-3-030-41956-1_5

Eaton, S. E. (2023). Postplagiarism: Transdisciplinary ethics and integrity in the age of artificial intelligence and neurotechnology. International Journal for Educational Integrity, 19(1), 1-10. https://doi.org/10.1007/s40979-023-00144-1

Edwards, B. (2023, April 6). Why ChatGPT and Bing Chat are so good at making things up. Ars Technica. https://arstechnica.com/information-technology/2023/04/why-ai-chatbots-are-the-ultimate-bs-machines-and-how-people-hope-to-fix-them/

________________________

Share this post: Embracing AI as a Teaching Tool: Practical Approaches for the Postplagiarism Classroom – https://drsaraheaton.com/2025/03/23/embracing-ai-as-a-teaching-tool-practical-approaches-for-the-post-plagiarism-classroom/

Sarah Elaine Eaton, PhD, is a Professor and Research Chair in the Werklund School of Education at the University of Calgary, Canada. Opinions are my own and do not represent those of my employer.

Posted by Sarah Elaine Eaton, Ph.D.
