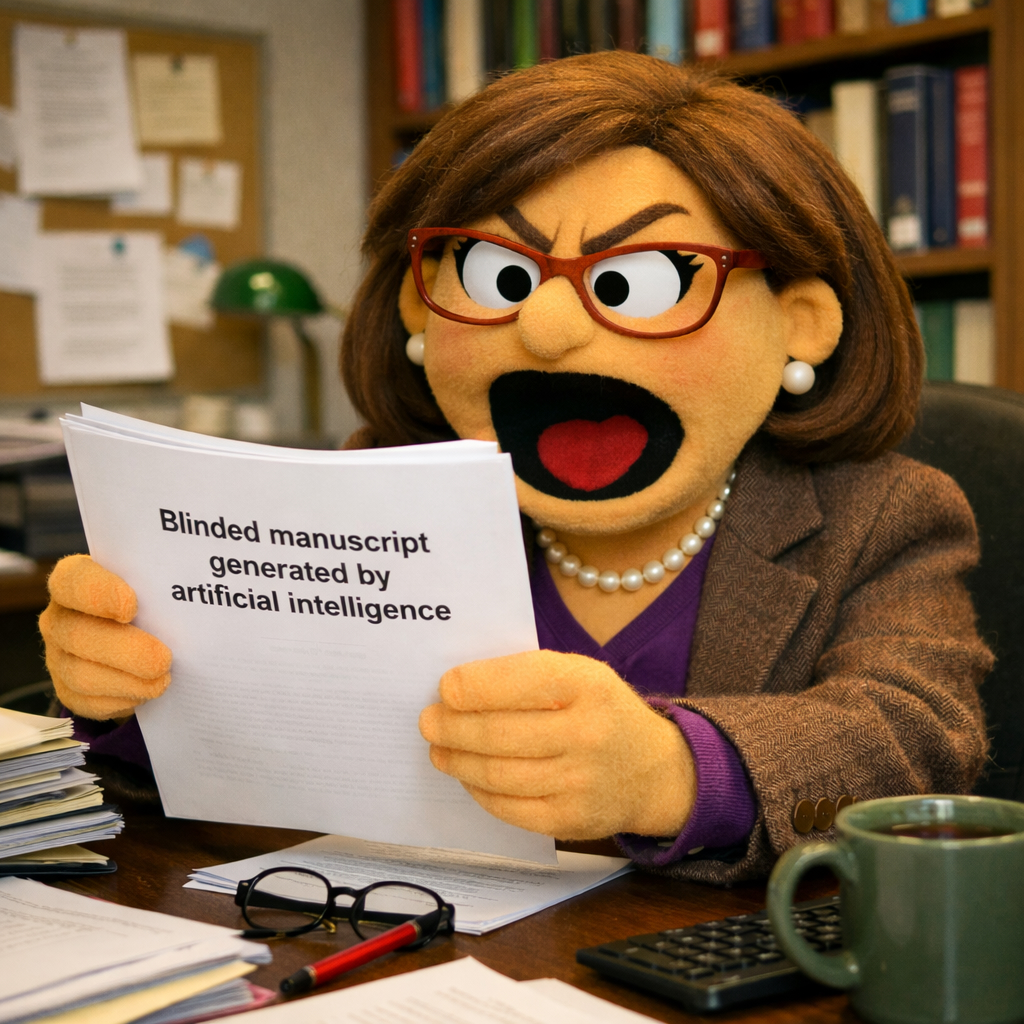

As the Editor-in-Chief of the International Journal for Educational Integrity, I have witnessed (and become super frustrated with) threats to academic publishing and research integrity from Gen AI. Don’t get me wrong, I am not opposed to AI, but I have been clear in my research and writing that technology can be used in good and helpful ways, or in ways that are unethical and inappropriate. Recently, our editorial office received a manuscript with the file name ‘Blinded manuscript generated by artificial intelligence.’

My reaction was, “Are you kidding me?! Well, that’s bold!” Although the honesty of the file name may be a rarity, the submission itself is symptomatic of a burgeoning crisis in academic publishing: the rise of ‘AI slop.’ Since the proliferation of large language models (LLMs), we have seen a dramatic increase in submissions. Now, I’m pretty sure that a portion of the manuscripts we are receiving are written entirely by AI agents or bots that send submissions on behalf of authors.

As a journal editor, let me be clear: The volume of manuscripts you send out does not equate to their value to our readership. It is not that I oppose the use of AI across the board, but I do object to manuscripts prepared and sent by bots, with no human involvement in the process. If a manuscript does not bring value to our readers, it gets an immediate desk rejection, and for good reason.

The Problem with AI Slop in Research

Academic journals exist to advance the frontiers of human knowledge. A manuscript is expected to contribute new and original findings to scholarship and science. AI-generated papers, by their very nature, struggle to meet this requirement.

- Lack of Empirical Depth: AI excels at synthesizing existing information but cannot conduct original fieldwork, clinical trials, or archival research. It mimics the structure of a study without performing the substance of it.

- Axiological Misalignment: There is a gap between the automated generation of text and the values-driven process of human inquiry. Research requires a commitment to truth, ethics, and accountability, qualities a machine cannot possess.

- The Echo Chamber Effect: These submissions often contain fabricated or corrupted citations, or circular logic that offers little to no utility to the reader. They clutter the ecosystem without moving the needle on critical conversations.

Upholding the Integrity of the Record

Our editorial board remains committed to a rigorous peer-review process, but let’s be clear: the ‘publish or perish’ culture, now supercharged by Gen AI, is threatening to overwhelm the very systems meant to ensure quality.

If an academic paper submitted for publication does not offer an original contribution, or if it lacks the human oversight necessary to guarantee its validity, it has no place in a scholarly journal. We are in a postplagiarism era, where the focus must shift from merely detecting copied text to evaluating the originality of thought and the integrity of the research process. Postplagiarism does not mean that we throw out academic and research integrity or that ‘anything goes’. We recognize that co-creation with Gen AI may be normal for some writers today. But having an AI agent write and submit manuscripts on your behalf wastes everyone’s time.

To our contributors: scholarship is a human endeavor. We value your insights, your unique perspectives, and your rigorous labour. Meanwhile, we will maintain our commitment to quality, and I expect that the journal’s rejection rate will remain high as we focus on papers that bring value to our readership.

______________

Share this post: Stop wasting my time! AI Agents Infiltrate Scholarly Publishing – https://drsaraheaton.com/2026/02/06/stop-wasting-my-time-ai-agents-infiltrate-scholarly-publishing/

Sarah Elaine Eaton, PhD, is a Professor and Research Chair in the Werklund School of Education at the University of Calgary, Canada. Opinions are my own and do not represent those of my employer.

Posted by Sarah Elaine Eaton, Ph.D.