When we talk about academic integrity in universities, we often focus on preventing plagiarism and cheating. But what if our very approach to enforcing these standards is unintentionally creating barriers for some of our most vulnerable students?

My recent research explores how current academic integrity policies and practices can negatively affect neurodivergent students, including those with ADHD, dyslexia, autism, and other learning differences. Our existing systems, structures, and policies can further marginalize students with cognitive differences.

The Problem with One-Size-Fits-All

Neurodivergent students face unique challenges that can be misunderstood or ignored. A dyslexic student who struggles with citation formatting isn’t necessarily being dishonest. They may be dealing with cognitive processing differences that make these tasks genuinely difficult. A student with ADHD who has trouble managing deadlines and tracking sources is not necessarily lazy or unethical. They may be navigating executive function challenges that affect time management and organization. Yet our policies frequently treat these struggles as potential misconduct rather than as differences that deserve support.

The Technology Paradox for Neurodivergent Students

Technology presents a particularly thorny paradox. On one hand, AI tools such as ChatGPT and text-to-speech software can be academic lifelines for neurodivergent students, helping them organize thoughts, overcome writer’s block, and express ideas more clearly. These tools can genuinely level the playing field.

On the other hand, the same technologies designed to catch cheating—especially AI detection software—appear to disproportionately flag neurodivergent students’ work. Autistic students or those with ADHD may be at higher risk of false positives from these detection tools, potentially facing misconduct accusations even when they have done their own work. This creates an impossible situation: the tools that help are the same ones that might get students in trouble.

Moving Toward Epistemic Plurality

So what’s the solution? Epistemic plurality, or recognizing that there are multiple valid ways of knowing and expressing knowledge. Rather than demanding everyone demonstrate learning in the exact same way, we should design assessments that allow for different cognitive styles and approaches.

This means:

- Rethinking assessment design to offer multiple ways for students to demonstrate knowledge

- Moving away from surveillance technologies like remote proctoring that create anxiety and accessibility barriers

- Building trust rather than suspicion into our academic cultures

- Recognizing accommodations as equity, not as “sanctioned cheating”

- Designing universally, so accessibility is built in from the start rather than added as an afterthought

What This Means for the Future

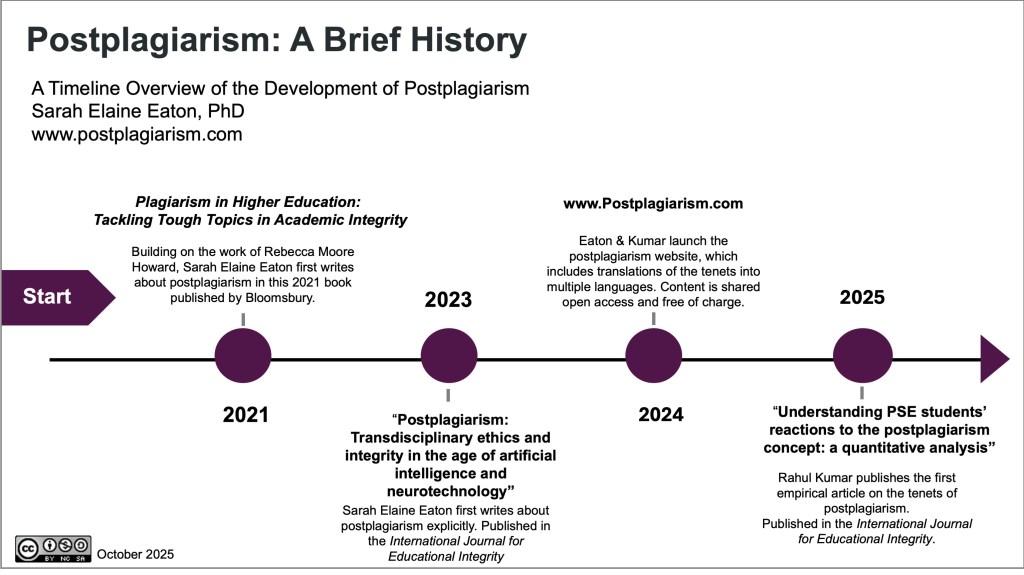

In the postplagiarism era, where AI and technology are seamlessly integrated into education, we move beyond viewing academic integrity purely as rule-compliance. Instead, we focus on authentic and meaningful learning and ethical engagement with knowledge.

This does not mean abandoning standards. It means recognizing that diverse minds may meet those standards through different pathways. A student who uses AI to help structure an essay outline isn’t necessarily cheating. They may be using assistive technology in much the same way another student might use spell-check or a calculator.

Call to Action

My review of existing research showed something troubling: we have remarkably little data about how neurodivergent students experience academic integrity policies. The studies that exist are small, limited to English-speaking countries, and often overlook the voices of neurodivergent individuals themselves.

We need larger-scale research, global perspectives, and most importantly, we need neurodivergent students to be co-researchers and co-authors in work about them. “Nothing about us without us” is not just a slogan, but a call to action for creating inclusive academic environments.

Key Messages

Academic integrity should support learning, not create additional barriers for students who already face challenges. By reimagining our approaches through a lens of neurodiversity and inclusion, we can create educational environments where all students can thrive while maintaining academic standards.

Academic integrity includes and extends beyond student conduct; it means that everyone in the learning system acts with integrity to support student learning. Ultimately, there can be no integrity without equity.

Read the whole article here:

Eaton, S. E. (2025). Neurodiversity and academic integrity: Toward epistemic plurality in a postplagiarism era. Teaching in Higher Education. https://doi.org/10.1080/13562517.2025.2583456

______________

Sarah Elaine Eaton, PhD, is a Professor and Research Chair in the Werklund School of Education at the University of Calgary, Canada. Opinions are my own and do not represent those of my employer.

Posted by Sarah Elaine Eaton, Ph.D.
